MiniMax will release China's first MoE-based large model

[Data Ape (数据猿) summary] MiniMax will release China's first MoE-based large model

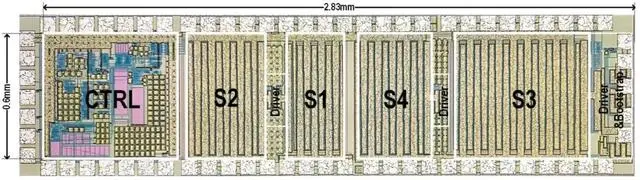

On December 28, Wei Wei, vice president of the Chinese large-model startup MiniMax, said at a sub-forum of the Digital China Forum and Digital Development Forum that the company will soon release China's first large model built on a Mixture-of-Experts (MoE) architecture, benchmarked against OpenAI's GPT-4.

MoE is a deep learning technique that combines multiple expert sub-networks with a gating (router) network that sends each input to only a few of those experts. Because only part of the model is active for any given input, a model can grow its total parameter count without a proportional increase in computation, which speeds up training and can improve predictive performance. A recent paper by researchers from Google, UC Berkeley, MIT, and other institutions shows that combining MoE with instruction tuning can significantly improve the performance of large language models.
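The routing idea behind MoE can be illustrated with a short, dependency-free Python sketch. This is a generic top-k gating layer under stated assumptions, not MiniMax's implementation, whose details the article does not describe: the linear router (`gate_weights`), the toy "experts" that simply scale their input (`make_scaling_expert`), and the choice of `k=2` are all hypothetical and chosen only for clarity.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def moe_forward(
    x: Vector,
    gate_weights: List[Vector],
    experts: List[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Top-k Mixture-of-Experts forward pass for a single input vector.

    gate_weights[i] is a hypothetical linear router row that scores expert i.
    Returns the combined output and the indices of the experts that ran.
    """
    # Router: softmax over one linear score per expert.
    probs = softmax([dot(w, x) for w in gate_weights])
    # Sparse activation: keep only the k highest-probability experts.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)

    out: Vector = []
    for i in chosen:
        y = experts[i](x)  # only the selected experts are evaluated
        weight = probs[i] / norm  # renormalize gate weights over the top-k
        if not out:
            out = [0.0] * len(y)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


def make_scaling_expert(scale: float) -> Expert:
    """Hypothetical stand-in for an expert network: multiplies input by `scale`."""
    return lambda v: [scale * vi for vi in v]


# Toy setup (illustrative values only): 4 experts, 2-dimensional input.
experts = [make_scaling_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, chosen = moe_forward([1.0, 2.0], gate_weights, experts, k=2)
print(chosen, out)  # chosen -> [1, 0]; experts 2 and 3 are never run
```

Because only `k` experts are evaluated per input, adding experts increases the total parameter count without a proportional increase in per-input compute. Production MoE layers apply the same kind of routing per token on batched tensors and often add load-balancing terms so that inputs are spread across experts.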

Source: DIYuan
